A judge in Washington state has blocked video evidence that’s been “AI-enhanced” from being submitted in a triple murder trial. And that’s a good thing, given that too many people seem to think applying an AI filter can give them access to secret visual data.

Judge Leroy McCullough in King County, Washington, wrote in a new ruling that the AI technology used “opaque methods to represent what the AI model ‘thinks’ should be shown,” according to a report from NBC News on Tuesday. And that’s a refreshing bit of clarity about what’s happening with these AI tools in a world of AI hype.

“This Court finds that admission of this AI-enhanced evidence would lead to a confusion of the issues and a muddling of eyewitness testimony, and could lead to a time-consuming trial within a trial about the non-peer-reviewable-process used by the AI model,” McCullough wrote.

The case involves Joshua Puloka, a 46-year-old accused of killing three people and wounding two others at a bar just outside Seattle in 2021. Lawyers for Puloka wanted to introduce cellphone video captured by a bystander that’s been AI-enhanced, though it’s not clear what they believe could be gleaned from the altered footage.

Puloka’s lawyers reportedly used an “expert” in creative video production who’d never worked on a criminal case before to “enhance” the video. The unnamed expert used an AI tool developed by Texas-based Topaz Labs that is available to anyone with an internet connection.

The introduction of AI-powered imaging tools in recent years has led to widespread misunderstandings about what this technology can do. Many people believe that running a photo or video through an AI upscaler gives them a clearer view of visual information that’s already there. In reality, the software isn’t revealing details hidden in the image; it’s adding information that wasn’t there in the first place.

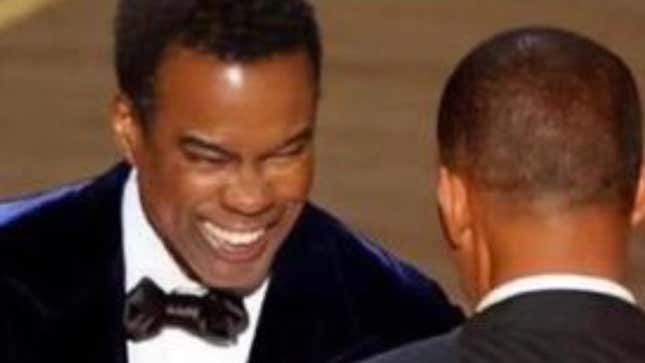

For example, there was a widespread conspiracy theory that Chris Rock was wearing some kind of face pad when he was slapped by Will Smith at the Academy Awards in 2022. The theory took off after people began running screenshots of the slap through image upscalers, believing they could get a better look at what was happening.

But that’s not what happens when you run images through AI enhancement. The program simply adds information in an effort to make the image sharper, which can often distort what’s really there. The screenshot that went viral was pixelated to begin with, and people only “discovered” things that simply weren’t there in the original broadcast after feeding it through AI programs.

Countless high-resolution photos and video from the incident show conclusively that Rock didn’t have a pad on his face. But that didn’t stop people from believing they could see something hidden in plain sight by “upscaling” the image to “8K.”

The rise of products labeled as AI has created a lot of confusion among the average person about what these tools can really accomplish. Large language models like the ones behind ChatGPT have convinced otherwise intelligent people that these chatbots are capable of complex reasoning when that’s simply not what’s happening under the hood. LLMs are essentially just predicting the next word they should spit out to sound like a plausible human. But because they do a pretty good job of sounding like humans, many users believe they’re doing something more sophisticated than a magic trick.

And that seems like the reality we’re going to live with as long as billions of dollars are getting poured into AI companies. Plenty of people who should know better believe there’s something profound happening behind the curtain, and when chatbots get things wrong they are quick to blame “bias” and overly strict guardrails. But when you dig a little deeper, you discover these so-called hallucinations aren’t some mysterious force enacted by people who are too woke, or whatever. They’re simply a product of this AI tech not being very good at its job.

Thankfully, a judge in Washington has recognized this tech isn’t capable of providing a better picture. But we don’t doubt there are plenty of judges around the U.S. who have bought into the AI hype and don’t understand the implications. It’s only a matter of time before we get an AI-enhanced video used in court that shows nothing but visual information added well after the fact.
